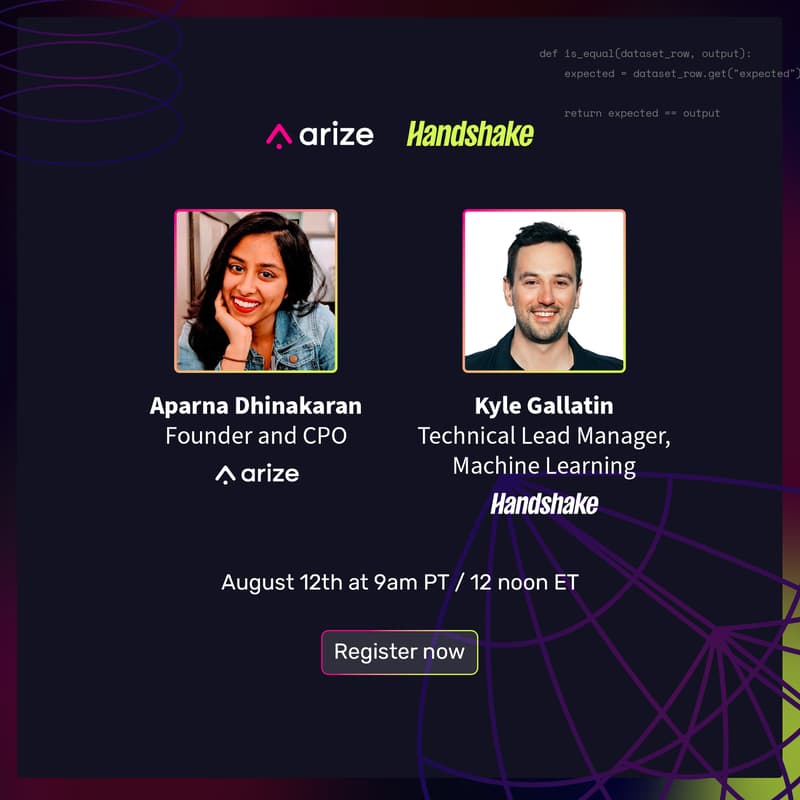

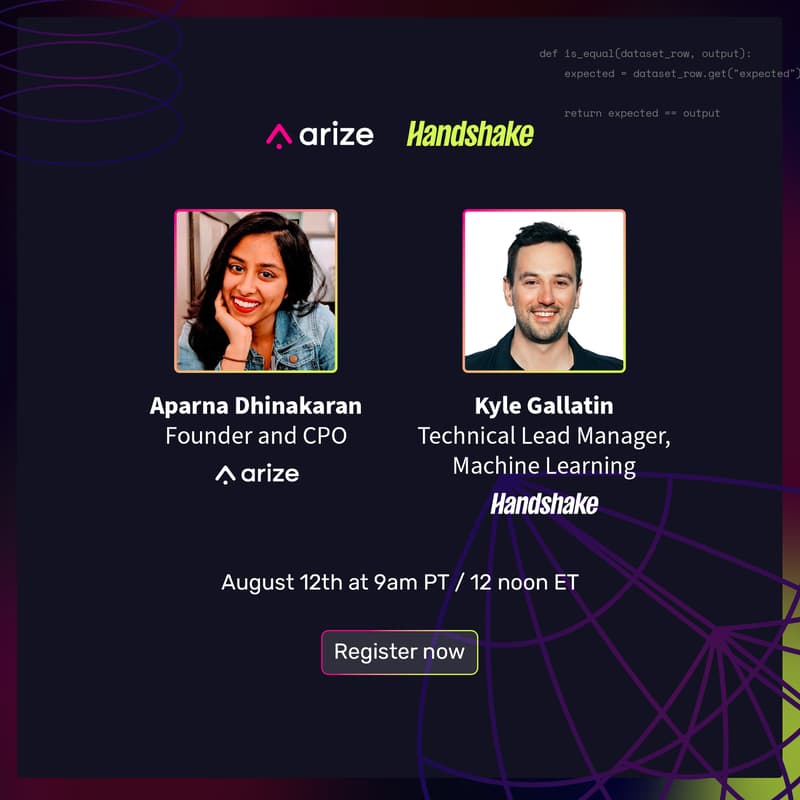

How Handshake Builds a Future-Proofed, Adaptable LLM Orchestration Stack Amid Rapid Evolution in the Space

Join Kyle Gallatin, Technical Lead Manager of Machine Learning at Handshake, and Aparna Dhinakaran, CPO & Co-Founder of Arize AI, for an insightful exploration and discussion on designing robust technical stacks that allow teams to rapidly deploy and scale gen-AI and agent systems.

Handshake, renowned for its innovative career and job-matching platform, has developed an orchestration framework that lets product and engineering teams rapidly spin up tailored, company-specific LLM use cases. This adaptable infrastructure provides plug-and-play integration of new models and frameworks, accelerating deployment and enhancing agility. Complementing this, Arize AI provides full-stack observability, empowering teams to iterate quickly, validate model outputs, proactively manage hallucination risks, and maintain consistency across multiple frameworks.

Together, the orchestration framework and the observability layer offer a model for organizations that want to deploy scalable, reliable LLM pipelines that adapt quickly to new developments.

What you'll learn:

How to Build Adaptable Technical Infrastructure: Strategies for building flexible, future-proof LLM stacks for rapid deployment.

Scalable Orchestration: Best practices from Handshake's approach to minimize code and accelerate deployment of custom use cases.

Multi-Provider Integration: Techniques to efficiently manage multiple integrations with Claude, Gemini, OpenAI, and DeepSeek through structured YAML outputs.

Prompt Engineering & Evaluation: Collaborative optimization strategies using Arize's real-time tracing and evaluation capabilities.