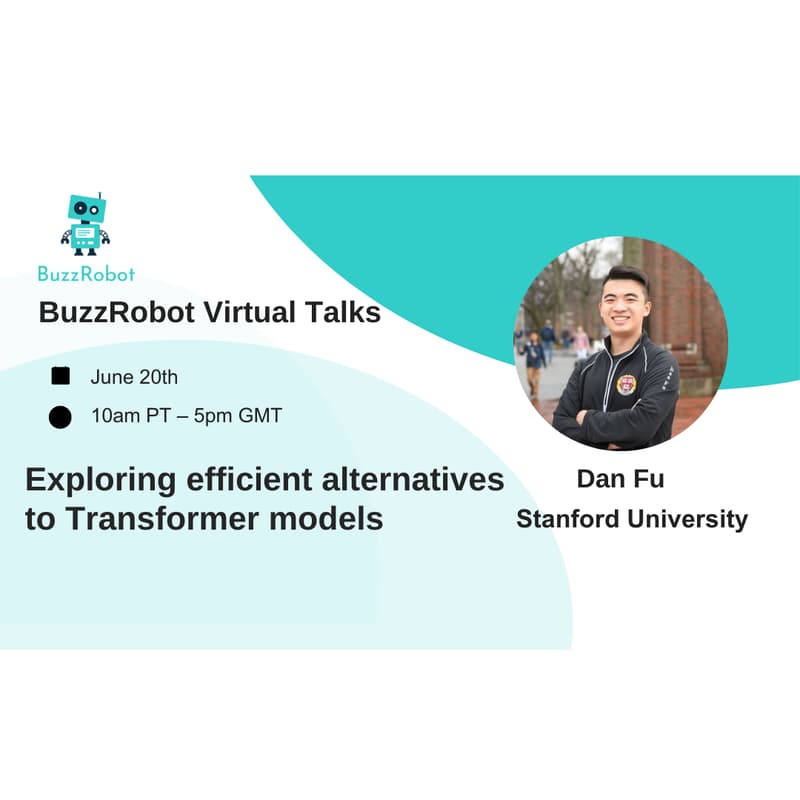

Exploring efficient alternatives to Transformer models

About Event

After years of Transformer dominance, efficient alternatives to the Transformer (Mamba, Griffin, SSMs, and others) are starting to gain traction. How did these new architectures come about, and what are the key insights behind them?

In this talk, Dan Fu from Stanford University will discuss the key role that simple synthetic languages, such as associative recall, played in developing the H3 (Hungry Hungry Hippos) models, the first state space models that were competitive with Transformers in language modeling. In associative recall, a model reads a sequence of key-value pairs and must then output the value associated with a queried key.

He will also share his vision for connecting the synthetics methodology to modern efficient language models.

Join this talk if you are interested in exploring efficient alternatives to Transformer models.