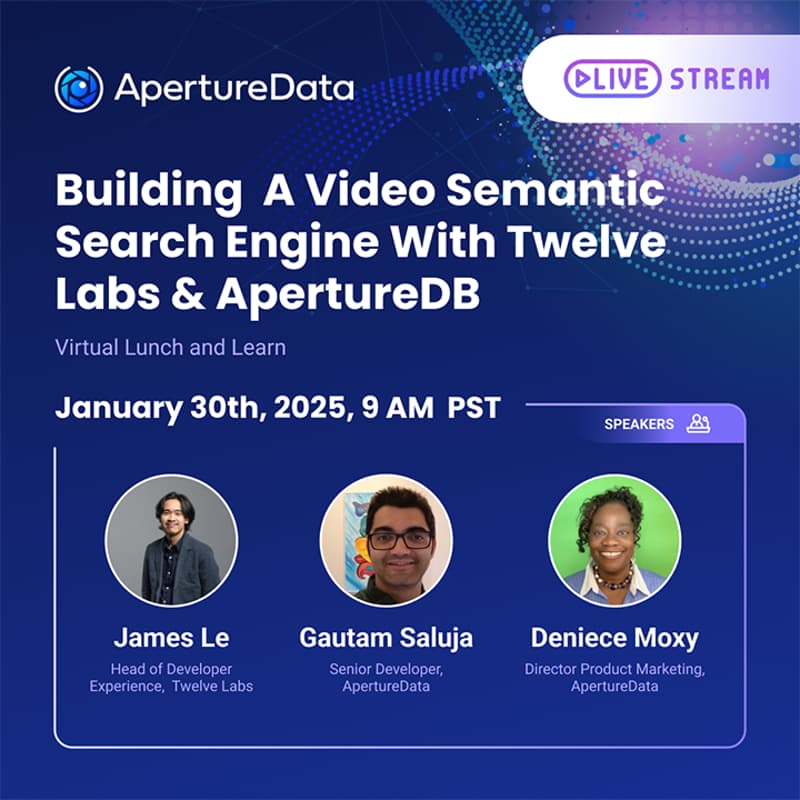

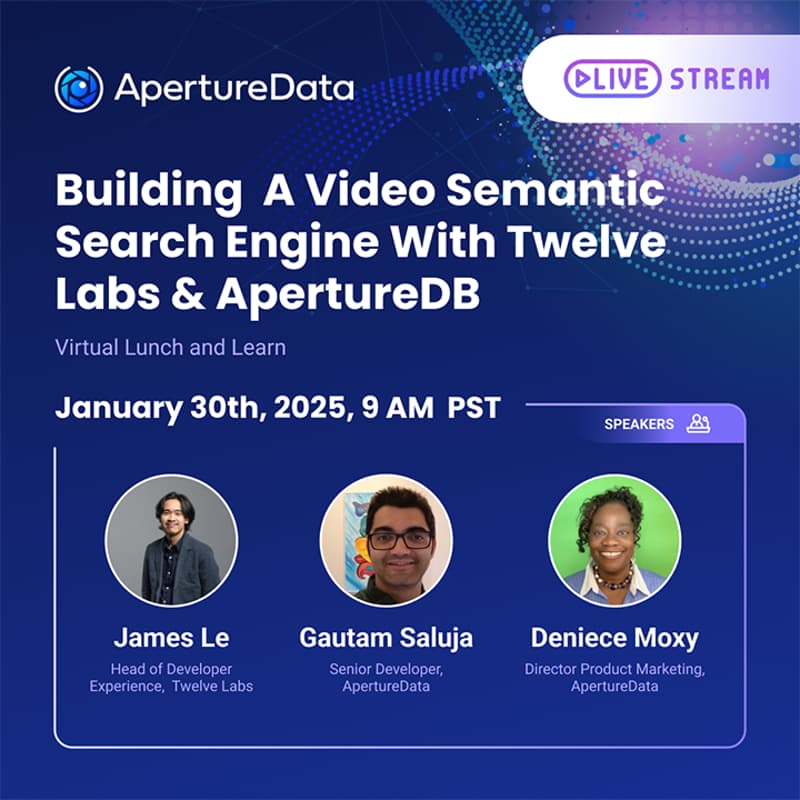

Virtual Lunch & Learn: Building A Semantic Video Search Engine

Join Twelve Labs and ApertureData to learn how to build a semantic video search engine with the Twelve Labs Embed API and ApertureDB.

DATE: January 30, 2025

TIME: 9AM PST

LOCATION: Online

SPEAKERS

James Le - Head of Developer Experience, Twelve Labs

Gautam Saluja - Senior Software Developer, ApertureData

Deniece Moxy - Director Product Marketing, ApertureData

ABOUT LUNCH & LEARN

This hands-on tutorial walks you through a workflow for building a semantic video search engine by integrating the Twelve Labs Embed API with ApertureDB.

Using Twelve Labs' Marengo-2.7 video model, you will learn to generate multimodal embeddings that capture video content. Combined with ApertureDB's vector + graph database, these embeddings enable accurate and efficient semantic video search for AI applications.

KEY TAKEAWAYS

• Understand the technical challenges associated with managing large volumes of video data.

• Learn how Twelve Labs and ApertureDB work together to provide a seamless solution for video understanding applications.

• Explore real-world use cases and best practices for implementing these technologies in your projects.

WHO SHOULD ATTEND

This webinar is ideal for developers, data scientists, and technical professionals involved in video data management, machine learning, and AI applications.

ABOUT SPEAKERS

James Le: A specialist in multimodal AI, James has contributed significant thought leadership to the field of video understanding.

Gautam Saluja: With extensive experience in data management and AI integration, Gautam has been instrumental in developing innovative solutions for complex data challenges.

Deniece Moxy: A seasoned product marketing leader with a passion for AI, Deniece excels at turning complex technologies into compelling stories that drive product awareness and growth.

Can’t make it to the webinar? No problem! By registering, you will secure on-demand access to the recorded session.