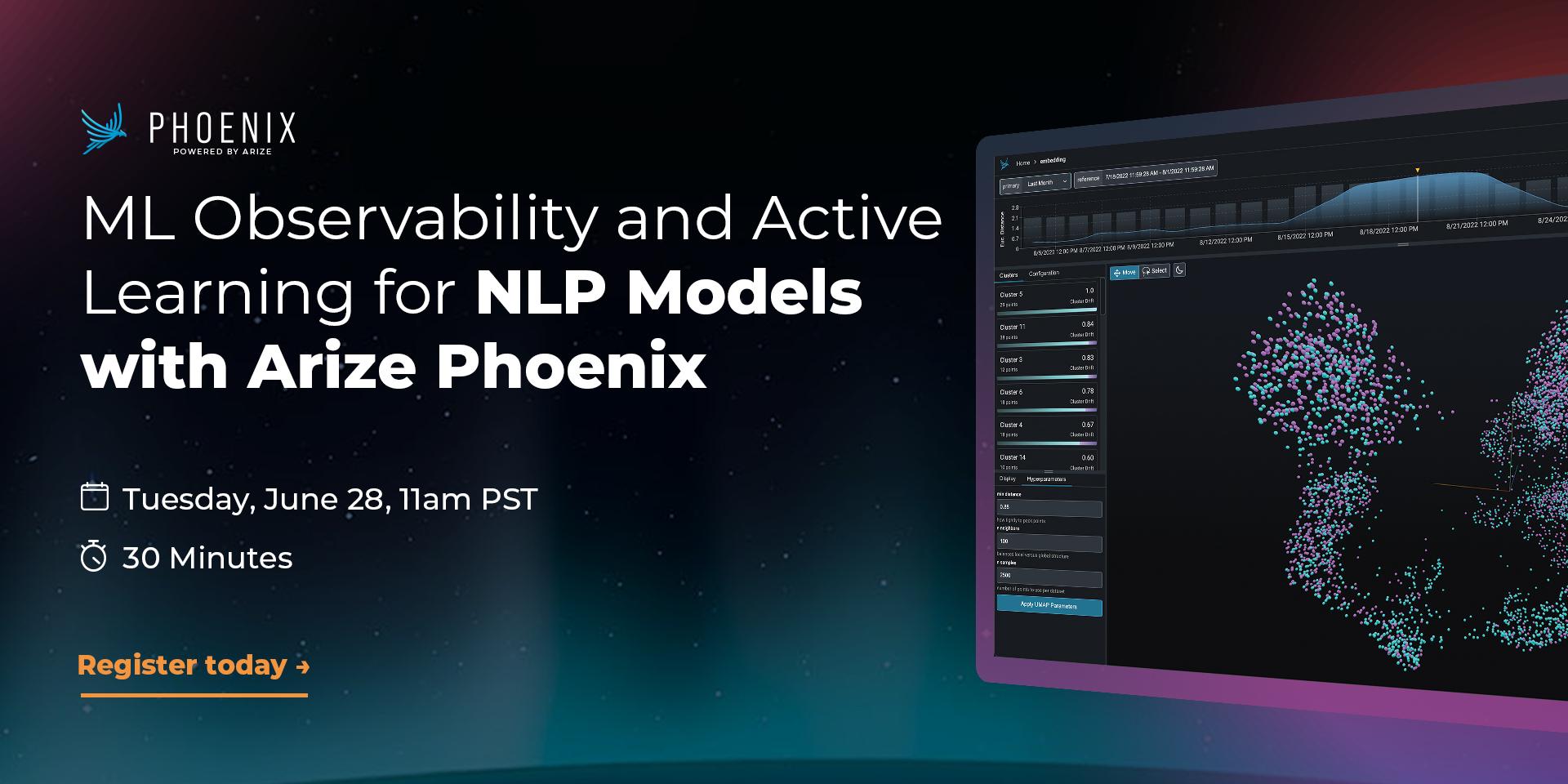

ML Observability and Active Learning for NLP Models with Arize Phoenix

In this hands-on virtual workshop, you'll use Phoenix, Arize AI's open-source library for ML observability, to monitor an NLP model and identify the root cause of a production issue.

This workshop explains the concept of ML observability from first principles. Through concrete examples, you will see how Phoenix makes your machine learning systems observable: it visualizes your data, detects data quality, drift, and performance issues, and helps you pinpoint the root cause of problems so you can resolve them quickly. In particular, you'll follow an active learning workflow that automatically identifies problematic production data and exports it for labeling and for fine-tuning your NLP model.
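Embedding drift, one of the issues described above, is often summarized by comparing where reference and production embeddings sit in vector space. The sketch below computes the Euclidean distance between the centroids of the two sets. It is a minimal illustration of the idea, not Phoenix's API. The function names and the toy two-dimensional vectors are hypothetical, and plain Python lists stand in for real model embeddings.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def centroid(vectors: Sequence[Vector]) -> list[float]:
    """Return the element-wise mean of a non-empty list of equal-length vectors."""
    if not vectors:
        raise ValueError("need at least one vector")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def euclidean_centroid_drift(reference: Sequence[Vector], production: Sequence[Vector]) -> float:
    """Euclidean distance between the reference and production embedding centroids."""
    return math.dist(centroid(reference), centroid(production))


# Hypothetical toy embeddings: production has shifted away from the reference data.
reference = [[0.0, 0.0], [0.2, 0.0], [0.0, 0.2]]
production = [[1.0, 1.0], [1.2, 1.0], [1.0, 1.2]]
print(round(euclidean_centroid_drift(reference, production), 3))  # → 1.414
```

A distance near zero means the production embeddings are centered where the reference data was. A growing distance is a cue to look for the clusters responsible.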

You will learn how to:

Identify the embedding cluster where a performance issue is occurring in an NLP model

Pinpoint the root cause of the performance issue

Export the cluster for active learning
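The three steps above can be sketched end to end without Phoenix. This is a minimal, library-free illustration under stated assumptions, not Phoenix's API or its implementation. The `Record` type, the toy two-dimensional embeddings, the sample texts, and the helper names (`kmeans`, `most_drifted_cluster`, `export_for_labeling`) are all hypothetical. Plain k-means stands in for Phoenix's own clustering. Clusters are ranked by their share of production data, which is one simple drift signal.

```python
import csv
import io
import math
from dataclasses import dataclass


@dataclass
class Record:
    text: str               # raw input text, kept so annotators can label it
    embedding: list[float]  # embedding vector for this input (hypothetical toy values)
    environment: str        # "reference" (e.g. training data) or "production"


def kmeans(points: list[list[float]], k: int, iterations: int = 10) -> list[int]:
    """Plain k-means; centroids start at the first k points so results are deterministic."""
    centroids = [list(p) for p in points[:k]]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assign every point to its nearest centroid.
        assignments = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Move each centroid to the mean of its members (empty clusters keep their old centroid).
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = [sum(axis) / len(members) for axis in zip(*members)]
    return assignments


def most_drifted_cluster(records: list[Record], assignments: list[int]) -> int:
    """Return the cluster whose members come most heavily from production data."""
    shares: dict[int, float] = {}
    for c in set(assignments):
        members = [r for r, a in zip(records, assignments) if a == c]
        shares[c] = sum(r.environment == "production" for r in members) / len(members)
    return max(shares, key=shares.get)


def export_for_labeling(records: list[Record], assignments: list[int], cluster: int) -> str:
    """Write the production records of one cluster to CSV text for an annotation queue."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["text", "cluster"])
    for record, assigned in zip(records, assignments):
        if assigned == cluster and record.environment == "production":
            writer.writerow([record.text, cluster])
    return buffer.getvalue()


records = [
    Record("Battery lasts all day", [0.0, 0.0], "reference"),
    Record("Charged twice for one order", [5.0, 5.0], "production"),
    Record("Screen is bright and sharp", [0.1, 0.0], "reference"),
    Record("Fast shipping, works great", [0.0, 0.1], "reference"),
    Record("Sound quality is decent", [0.1, 0.1], "production"),
    Record("Refund still not processed", [5.1, 5.0], "production"),
    Record("Billing page keeps crashing", [5.0, 5.1], "production"),
]
assignments = kmeans([r.embedding for r in records], k=2)  # step 1: find clusters
problem = most_drifted_cluster(records, assignments)        # step 2: flag the problem cluster
print(export_for_labeling(records, assignments, problem))   # step 3: export for labeling
```

Ranking by production share surfaces inputs that the reference data never covered. Here, the three billing complaints form a cluster that contains no reference examples. Where ground-truth labels are available, a per-cluster accuracy could replace the production share so the ranking targets the performance issue directly.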